In this talk, I will share our journey behind developing an effective long-context language model. I’ll begin by introducing our initial approach of using parallel context encoding (CEPE). Despite achieving significant efficiency gains and promising perplexity, we found that CEPE often struggled with basic synthetic tasks. This motivated us to create HELMET, a comprehensive and reliable benchmark for long-context LMs, featuring a diverse range of tasks and developer-friendly features. Using HELMET and insights from CEPE, we conducted extensive ablation studies on data mixture, scaling, instruction tuning, and position extrapolation. This led to ProLong, a top-performing 10B long-context model with an effective context length of 512K.

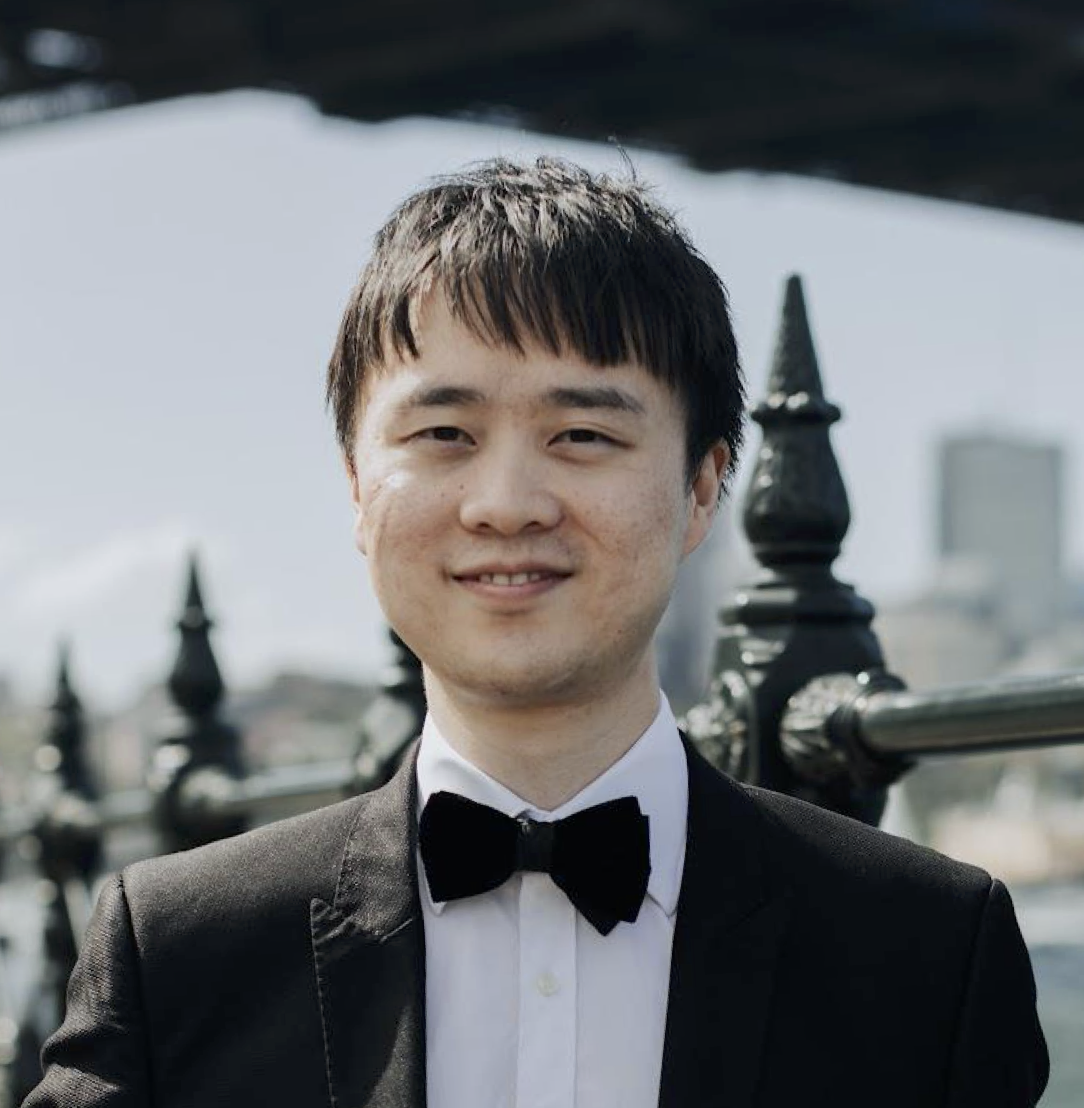

Speaker Bio

Tianyu Gao is a 5th-year PhD student at Princeton University advised by Prof. Danqi Chen. He is interested in building capable, reliable, and efficient language models, with a current focus on long-context LMs and instruction following. He co-organized the first workshop on long-context foundation models at ICML 2024. He received an IBM PhD Fellowship in 2023 and an ACL Outstanding Paper Award in 2022.

More Details

- When: Wed 4 Dec 2024, 1-2 pm (Brisbane time)

- Speaker: Tianyu Gao (Princeton University)

- Host: Ruihong Qiu

- Zoom: https://uqz.zoom.us/j/84022245453 [Recording]

No.24-17 Mitigating Distribution Shifts in Using Pre-trained Vision-Language Models