Despite the excellent performance of LLMs across various output-based benchmarks, we argue that focusing solely on outputs overlooks both underlying risks and untapped opportunities within their internal representations. This talk explores how structured representation learning can advance model interpretability, robustness, and reasoning capabilities by examining the latent space where LLMs truly "think". We address two core questions. First, how far can we understand and control these models through their internal representations? Second, how can we leverage structured latent representations to build self-improving LLM paradigms? By identifying and manipulating interpretable model internals, we demonstrate emerging approaches for enhanced model steerability and effective reasoning training, ultimately enabling more transparent and automated reasoning systems.

Speaker Bio

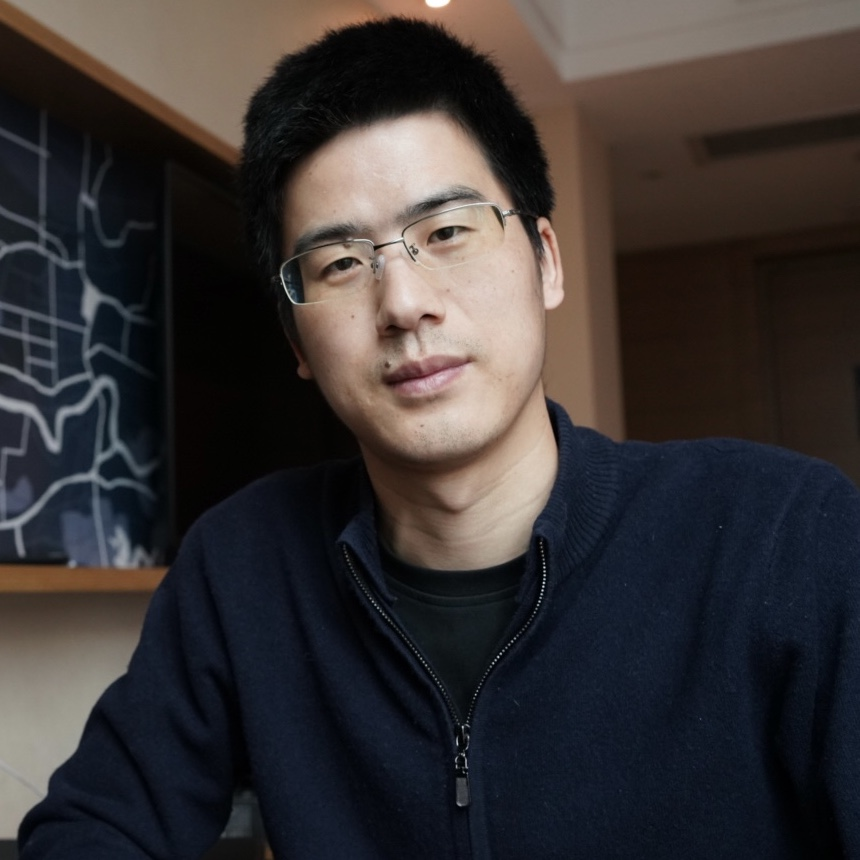

Hanqi Yan is an Assistant Professor in the Department of Informatics at King's College London. Before that, she was a postdoctoral researcher in the same department (2024-2025). Her research focuses on interpretability and robustness of language models across various reasoning tasks, especially from a representation learning perspective. She has published more than 10 papers on related topics as (co-)first author at top conferences such as ACL, EMNLP, NeurIPS, and ICML. She serves as an area chair for ACL, EMNLP, EACL, and NAACL. She has given a tutorial on structural representation learning for LLMs at AAAI 2026 and co-chaired the student workshop at AACL 2022. She obtained her PhD from the University of Warwick (2024), her MSc from Peking University (2020), and her BEng from Beihang University (2017).

More Details

- When: Wed 11 Feb 2026, 1-2 pm (Brisbane time)

- Speaker: Prof Hanqi Yan (KCL)

- Host: Dr Ruihong Qiu

- Zoom: https://uqz.zoom.us/j/83908150532 [Recording]
